One of the most important steps in web application testing or bug bounty hunting is enumeration: the process of actively or passively collecting information about the target. It is the first stage of an attack on a target network, and the more information we collect, the easier it becomes to exploit the target in later steps. Despite its importance, enumeration is one of the steps pen-testers most often neglect. This article covers a basic methodology for web enumeration.

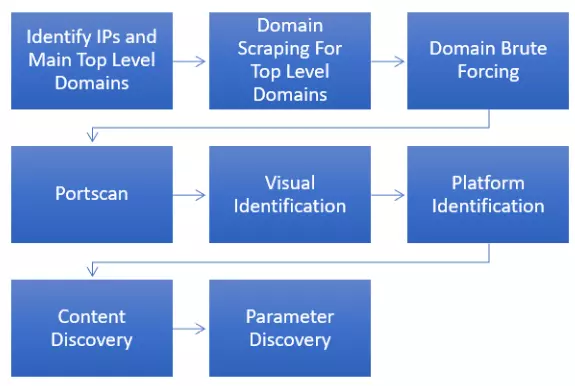

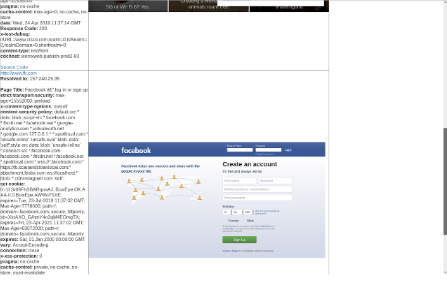

The diagram below shows the steps followed by many top-level bug bounty hunters and web application testers:

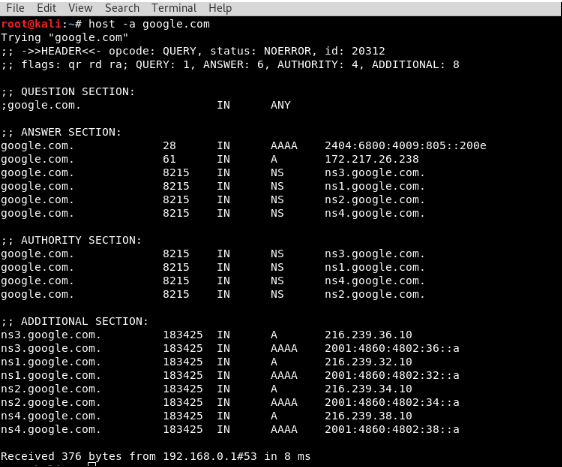

Identifying the IP addresses and the main domain servers of the target is the initial step of enumeration. A built-in tool for this is available in Kali Linux. The syntax is shown below:

A subdomain is, as the name suggests, an additional section of the main domain name. In most cases, pen-testers concentrate on the main domain of a website and leave the subdomains untouched, so critical vulnerabilities on those subdomains go unexploited. Developers also often leave private subdomains publicly reachable, and these can contain useful information about the target organization. For this reason it is always worth finding as many of the target's subdomains as possible.

There are two basic ways of enumerating subdomains:

Scraping

Scraping is a passive reconnaissance technique whereby one uses external services and sources to gather subdomains belonging to a specific host. Some search services index subdomains that have been crawled in the past, allowing you to collect and sort the results quickly without much effort. A few useful tools for this are listed below:

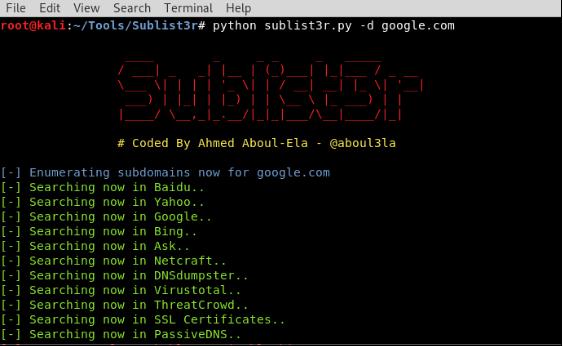

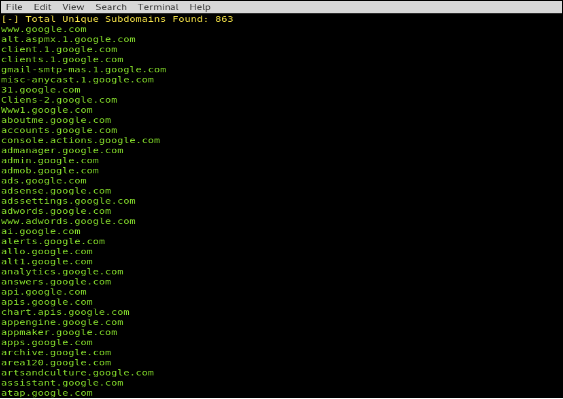

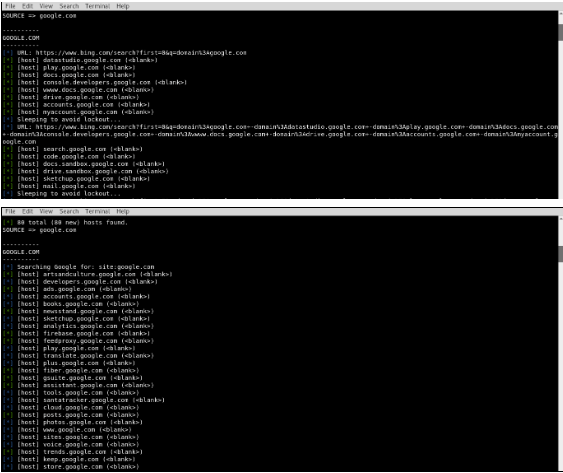

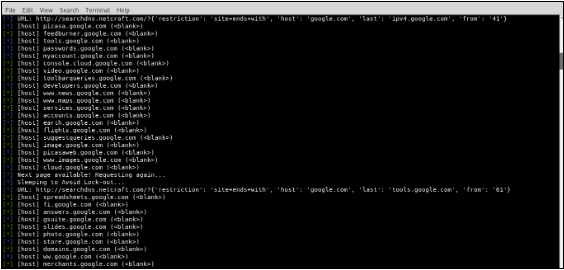

1. Sublist3r

Sublist3r is a Python tool designed to enumerate subdomains of websites using OSINT. It gathers subdomains from search engines such as Google and Yahoo, and also enumerates using Netcraft, VirusTotal, ReverseDNS, DNSdumpster and ThreatCrowd.

Download: https://github.com/aboul3la/Sublist3r

The syntax for sublist3r is shown below:

2. Enumall

Enumall is a subdomain enumeration tool developed by Jason Haddix, Head of Trust and Security at Bugcrowd. It uses Google, Bing, Baidu and Yahoo scraping, Netcraft, and brute forcing to find subdomains, and it combines other tools such as Recon-ng and Alt-DNS. It can also process multiple domains within the same session.

Download: https://github.com/jhaddix/domain

The syntax for enumall is given below:

Sub Bruting

Sub bruting iterates through a wordlist of candidate names and uses the responses to determine whether each host is valid. You can also build a personal wordlist from terms you have come across in the past or that are commonly linked to the services you are interested in. A few useful tools for this are listed below:

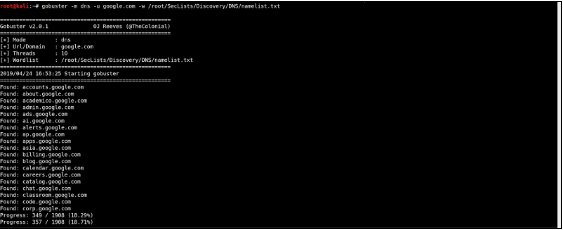

1. Gobuster dns

Gobuster's DNS mode brute forces DNS subdomains, with wildcard support, using a given wordlist.

Download: https://github.com/OJ/gobuster

The syntax for gobuster dns mode is given below:

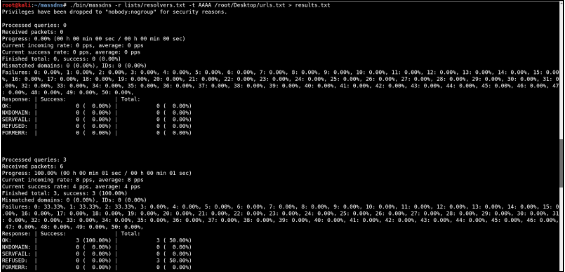

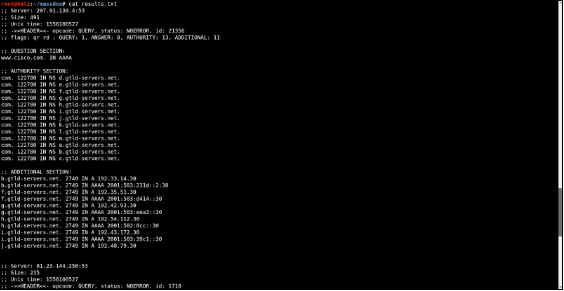

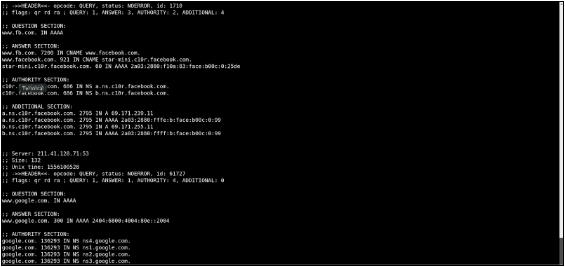
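The wordlist-driven lookup behind DNS brute forcing can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not gobuster's implementation: the function names `resolve` and `brute_subdomains`, the random-looking probe label used for the wildcard check, and the sample zone are all hypothetical. The wildcard check matters because some zones answer for every name, in which case a successful lookup proves nothing.

```python
import socket
from typing import Callable, Iterable, List, Optional


def resolve(hostname: str) -> Optional[str]:
    """Return one IPv4 address for `hostname`, or None if it does not resolve."""
    try:
        return socket.gethostbyname(hostname)
    except (OSError, UnicodeError):
        return None


def brute_subdomains(
    domain: str,
    words: Iterable[str],
    resolver: Callable[[str], Optional[str]] = resolve,
) -> List[str]:
    """Return the candidate subdomains of `domain` that resolve to an address."""
    # Wildcard check: look up a label that should not exist. If it resolves,
    # candidates pointing at the same address are treated as wildcard hits.
    wildcard_ip = resolver(f"zz-unlikely-label-7f3a9.{domain}")
    found = []
    for word in words:
        label = word.strip()
        if not label:
            continue
        host = f"{label}.{domain}"
        ip = resolver(host)
        if ip is not None and ip != wildcard_ip:
            found.append(host)
    return found


if __name__ == "__main__":
    # A stand-in zone (documentation addresses) so the example runs offline.
    fake_zone = {"www.example.com": "192.0.2.10", "mail.example.com": "192.0.2.11"}
    print(brute_subdomains("example.com", ["www", "mail", "dev"], resolver=fake_zone.get))
```

Passing the resolver in as a parameter keeps the loop independent of how names are actually resolved, so the default `resolve` can be swapped for a faster resolver without touching the rest of the code.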

2. Massdns

MassDNS is a simple high-performance DNS stub resolver targeting those who seek to resolve a massive amount of domain names in the order of millions or even billions. Without special configuration, MassDNS is capable of resolving over 350,000 names per second using publicly available resolvers.

Download: https://github.com/blechschmidt/massdns

The syntax for use is:

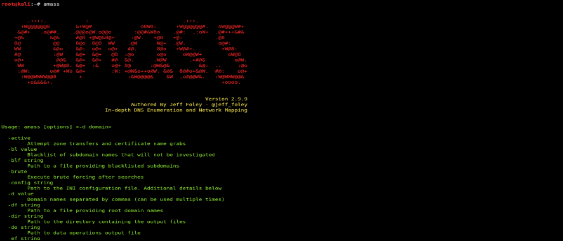

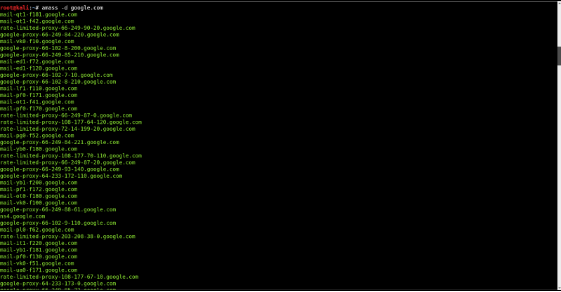

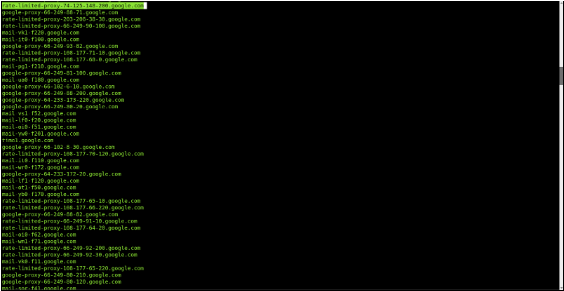

3. Amass

The OWASP Amass tool suite obtains subdomain names by scraping data sources, recursive brute forcing, crawling web archives, permuting/altering names and reverse DNS sweeping.

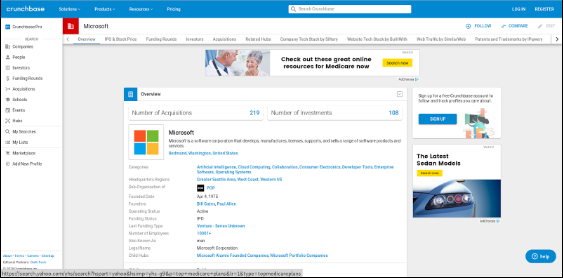

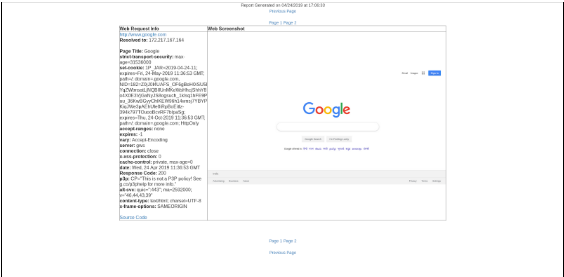

Many large companies, such as Microsoft, frequently acquire smaller companies and startups. The websites of acquired companies are often in scope for the parent company’s bug bounty program as well. To get information about acquisitions we can use either Wikipedia or Crunchbase, a platform for finding business information about private and public companies. Crunchbase information includes investments and funding, founding members and individuals in leadership positions, mergers and acquisitions, news, and industry trends. The images below show results from both sites.

Port scanning is used to determine which ports a system is listening on, which in turn indicates which services may be running on it. Depending on those services, an attacker might find ways to exploit vulnerabilities in the system and gain unauthorized access.

A few useful tools for port scanning are listed below:

1. Nmap

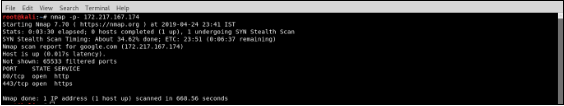

Nmap (Network Mapper) is an open source tool for network exploration and security auditing. It was designed to rapidly scan large networks, although it works fine against single hosts. Nmap uses raw IP packets to determine what hosts are available on the network, what services (application name and version) those hosts are offering, and what operating systems (and OS versions) they are running.

The output from Nmap is a list of scanned targets, with information on each depending on the options used. Key among that information is the “interesting ports table”. It lists the port number and protocol, service name, and state. The state is either open, filtered, closed, or unfiltered. Open means that an application on the target machine is listening for connections/packets on that port. Filtered means that a firewall, filter, or other network obstacle is blocking the port so that Nmap cannot tell whether it is open or closed. Closed ports have no application listening on them, though they could open up at any time. Unfiltered means that the port responds to Nmap's probes, but Nmap cannot determine whether it is open or closed.
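The state classification described above can be approximated with ordinary TCP connections. The sketch below is not how Nmap works internally (Nmap uses raw IP packets); it is a plain connect scan using Python's standard `socket` module, and the function name `scan_ports` and the one-second timeout are assumptions made for illustration. A successful connection is reported as open, a refused connection as closed, and a timeout or any other network error as filtered.

```python
import socket
from typing import Dict, Iterable


def scan_ports(host: str, ports: Iterable[int], timeout: float = 1.0) -> Dict[int, str]:
    """Classify each TCP port on `host` as open, closed, or filtered."""
    results = {}
    for port in ports:
        sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        sock.settimeout(timeout)
        try:
            sock.connect((host, port))
            results[port] = "open"       # full TCP handshake completed
        except ConnectionRefusedError:
            results[port] = "closed"     # host answered, nothing is listening
        except OSError:
            # Timeouts and unreachable-host errors: something is dropping or
            # blocking the probe, so the real state cannot be determined.
            results[port] = "filtered"
        finally:
            sock.close()
    return results


if __name__ == "__main__":
    # Scans the local machine only; replace host and ports as needed.
    print(scan_ports("127.0.0.1", [22, 80, 443]))
```

A connect scan cannot produce the unfiltered state, since that state comes from probe types that need raw packets.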

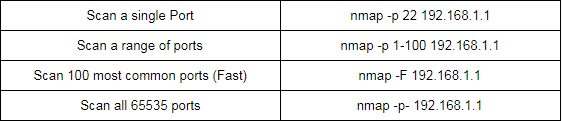

Nmap commands for port selection:

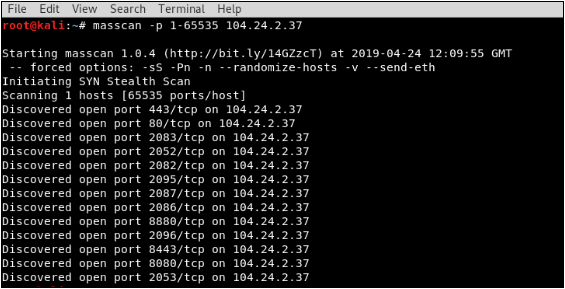

2. masscan

masscan is an Internet-scale port scanner, useful for large scale surveys of the Internet, or of internal networks. While the default transmit rate is only 100 packets/second, it can go as fast as 25 million packets/second, a rate sufficient to scan the Internet in approximately 3 minutes for one port.
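The figures quoted above can be checked with simple arithmetic. The sketch below assumes one probe packet per address and port, and it counts all 2^32 IPv4 addresses, which slightly overstates the work because not every address is publicly routable. The constant and function names are illustrative.

```python
IPV4_ADDRESSES = 2 ** 32      # every possible IPv4 address
MAX_RATE = 25_000_000         # packets per second, the top rate quoted above
DEFAULT_RATE = 100            # packets per second, the default quoted above


def scan_seconds(targets: int, ports: int, rate: int) -> float:
    """Seconds needed to send one probe per (target, port) pair at `rate`."""
    return targets * ports / rate


if __name__ == "__main__":
    fast = scan_seconds(IPV4_ADDRESSES, 1, MAX_RATE)
    slow = scan_seconds(IPV4_ADDRESSES, 1, DEFAULT_RATE)
    print(f"at {MAX_RATE} pps: {fast / 60:.1f} minutes")    # 2.9 minutes
    print(f"at {DEFAULT_RATE} pps: {slow / 86400:.0f} days")  # 497 days
```

At the maximum rate the result is roughly 172 seconds, which matches the "approximately 3 minutes" claim. At the default rate the same single-port sweep would take well over a year, so the transmit rate is the setting that matters most for large surveys.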

The syntax for use is:

Visual identification is an important step in categorizing our targets. It helps us understand what web applications are running on the different subdomains and decide which targets may be easy to exploit.

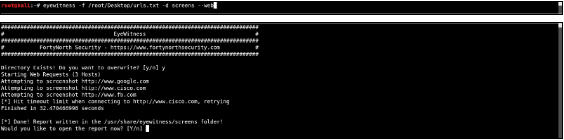

The tool used for visual identification is EyeWitness by FortyNorth Security.

EyeWitness is designed to take screenshots of websites, RDP services, and open VNC servers, provide some server header info, and identify default credentials if possible.

The basic syntax for usage is shown below:

./EyeWitness -f urls.txt --web
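The `urls.txt` input can be generated from the hostnames collected during subdomain enumeration. The following is a small sketch that assumes the file should contain one URL per line and that both HTTP and HTTPS are worth checking for every host; the function name `build_url_list` and the sample hostnames are hypothetical.

```python
from typing import Iterable, List, Sequence


def build_url_list(hosts: Iterable[str], schemes: Sequence[str] = ("http", "https")) -> List[str]:
    """Return one URL per scheme for each unique, non-empty hostname."""
    urls = []
    seen = set()
    for host in hosts:
        host = host.strip().lower()
        if not host or host in seen:
            continue  # skip blank lines and duplicates
        seen.add(host)
        urls.extend(f"{scheme}://{host}" for scheme in schemes)
    return urls


if __name__ == "__main__":
    hosts = ["www.example.com", "mail.example.com", "WWW.example.com"]
    # Prints one URL per line; redirect the output to urls.txt.
    print("\n".join(build_url_list(hosts)))
```

De-duplicating before the screenshot run keeps the report short when several enumeration tools return overlapping results.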

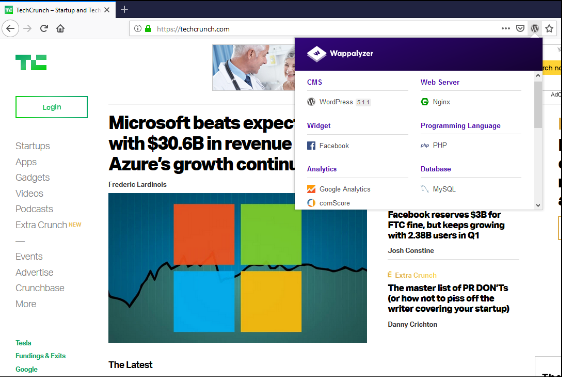

To get an overall view of the target system, it is important to check which technologies the website uses. This step lets us check whether the content management system is outdated or vulnerable, whether the libraries in use are out of date, and so on. In other words, platform identification gives us a few additional attack surfaces to exploit.

Wappalyzer

Wappalyzer is a cross-platform utility that uncovers the technologies used on websites. It detects content management systems, ecommerce platforms, web frameworks, server software, databases, analytics tools and many more. It fingerprints software using unique patterns found in website source code, response headers, script variables and several other methods. Wappalyzer collects data anonymously and organically through its browser extensions. To use it, download and install the Wappalyzer browser extension; whenever you open a site, it automatically identifies the technologies being used.
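Pattern-based fingerprinting of this kind can be illustrated with a few regular expressions. The sketch below is not Wappalyzer's rule set or code: the `SIGNATURES` table holds three simplified, hypothetical signatures, and `identify` is an illustrative name. It only shows the general idea of matching patterns against response headers and page source.

```python
import re
from typing import Dict, List

# Hypothetical, simplified signatures for illustration only.
SIGNATURES = {
    "WordPress": {"html": r"/wp-content/"},
    "nginx": {"header": ("Server", r"nginx")},
    "PHP": {"header": ("X-Powered-By", r"PHP")},
}


def identify(headers: Dict[str, str], html: str) -> List[str]:
    """Return the names of all signatures that match the headers or the HTML."""
    # Header names are case-insensitive, so normalize them before matching.
    normalized = {name.lower(): value for name, value in headers.items()}
    detected = []
    for tech, rule in SIGNATURES.items():
        html_pattern = rule.get("html")
        if html_pattern and re.search(html_pattern, html, re.IGNORECASE):
            detected.append(tech)
            continue
        header_rule = rule.get("header")
        if header_rule:
            header_name, pattern = header_rule
            value = normalized.get(header_name.lower(), "")
            if re.search(pattern, value, re.IGNORECASE):
                detected.append(tech)
    return detected


if __name__ == "__main__":
    sample_headers = {"Server": "nginx/1.18.0", "X-Powered-By": "PHP/7.4.3"}
    sample_html = '<link rel="stylesheet" href="/wp-content/themes/x/style.css">'
    print(identify(sample_headers, sample_html))
```

Version strings such as the ones in the sample headers are what make it possible to spot outdated software.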

Most web application servers have directories and files that are not meant to be accessible to end users. These may hold sensitive information about the application, such as stored credentials. Enumeration allows us to uncover hidden functionality and hidden paths in web applications, which can then be explored further for vulnerabilities. We can also verify whether the uncovered files and directories have proper permissions configured and whether they leak any sensitive information.
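The underlying idea is simple enough to sketch in Python: request each candidate path from a wordlist and keep the ones that do not come back as "not found". This is a minimal illustration using only the standard library, not the implementation of any tool named in this article. The names `fetch_status` and `brute_paths` and the stand-in site in the usage example are assumptions.

```python
import urllib.error
import urllib.request
from typing import Callable, Dict, Iterable


def fetch_status(url: str, timeout: float = 5.0) -> int:
    """Return the HTTP status code for a GET request to `url` (0 on network error)."""
    try:
        # urlopen follows redirects, so this is the status of the final response.
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # 4xx and 5xx responses arrive as exceptions
    except (urllib.error.URLError, OSError):
        return 0


def brute_paths(
    base_url: str,
    words: Iterable[str],
    fetch: Callable[[str], int] = fetch_status,
) -> Dict[str, int]:
    """Map each candidate URL that appears to exist to its status code."""
    found = {}
    for word in words:
        path = word.strip().lstrip("/")
        if not path:
            continue
        url = f"{base_url.rstrip('/')}/{path}"
        status = fetch(url)
        # 403 is kept on purpose: the path exists even though access is denied.
        if status not in (0, 404):
            found[url] = status
    return found


if __name__ == "__main__":
    # A stand-in site so the example runs without touching the network.
    site = {"http://example.com/admin": 403, "http://example.com/backup.zip": 200}
    print(brute_paths("http://example.com", ["admin", "backup.zip", "nope"],
                      fetch=lambda url: site.get(url, 404)))
```

Recording the status code rather than a simple yes/no helps with the permission check mentioned above, because a 200 on a path that should be protected is a finding in itself.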

A few useful tools are listed below:

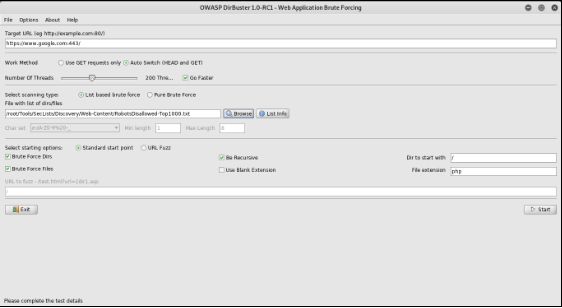

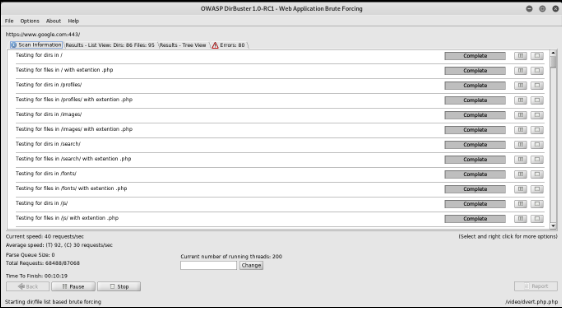

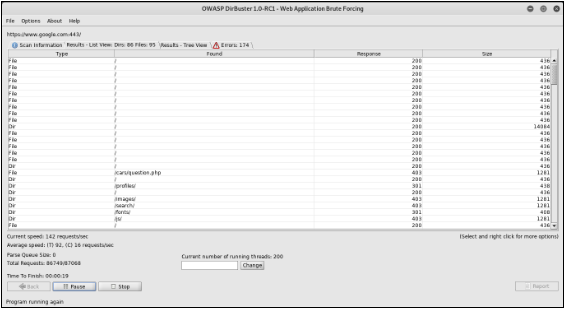

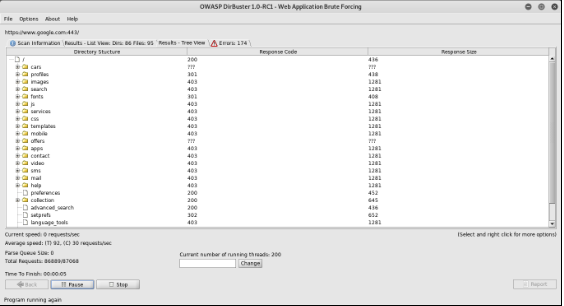

1. DirBuster

DirBuster is a multi-threaded Java application designed to brute force directory and file names on web/application servers. DirBuster also has the option to perform a pure brute force, which leaves the hidden directories and files nowhere to hide. This tool ships with Kali Linux. Usage:

Enter the URL you want to test in the Target URL field and load the wordlist file you want to use for the brute force.

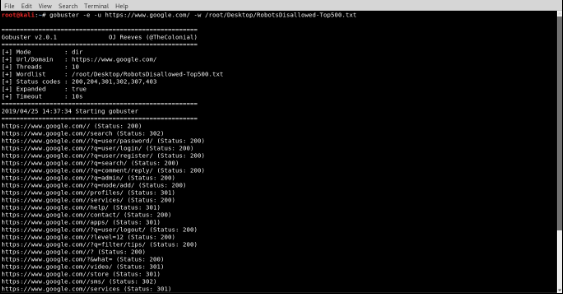

2. Gobuster

Gobuster can also be used to brute force directories and files on websites, and it can retrieve the full path for a directory or file.

The syntax is:

Web applications use parameters (or queries) to accept user input. Consider the following example:

http://testphp.vulnweb.com/artists.php?artist=1

This URL seems to load information for a specific id. But what if there is also a parameter named admin which, when set to True, makes the endpoint return more information? To find such hidden parameters we use a method known as parameter brute forcing. The tools we can use for this purpose are shown below:
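Before turning to the tools, the core idea can be sketched as follows: request the page once as a baseline, then add one candidate parameter at a time and flag any that change the response. This is a simplified illustration, not how Parameth or Arjun are implemented. The names `with_param` and `brute_params` are hypothetical, and `fetch` stands for any function you supply that returns the response body for a URL.

```python
from typing import Callable, Iterable, List
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_param(url: str, name: str, value: str) -> str:
    """Return `url` with the query parameter name=value appended."""
    parts = urlsplit(url)
    query = parse_qsl(parts.query, keep_blank_values=True) + [(name, value)]
    return urlunsplit(parts._replace(query=urlencode(query)))


def brute_params(
    url: str,
    names: Iterable[str],
    fetch: Callable[[str], str],
    value: str = "1",
) -> List[str]:
    """Return the parameter names whose presence changes the response body."""
    baseline = fetch(url)
    return [name for name in names if fetch(with_param(url, name, value)) != baseline]


if __name__ == "__main__":
    # A stand-in endpoint that reveals more when admin=True is supplied.
    def fake_fetch(target: str) -> str:
        return "extended profile" if "admin=True" in target else "basic profile"

    target = "http://testphp.vulnweb.com/artists.php?artist=1"
    print(brute_params(target, ["debug", "admin", "test"], fake_fetch, value="True"))
```

Comparing whole response bodies is the crudest possible check. Pages with timestamps or other dynamic content differ on every request, so a practical comparison would need to tolerate that noise.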

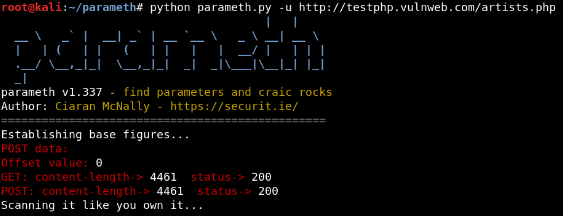

1. Parameth

This tool can be used to brute force the discovery of GET and POST parameters. Often, when you are busting a directory for common files, you can identify scripts (for example test.php) that look like they need to be passed an unknown parameter. Parameth can help find those parameters.

Download: https://github.com/maK-/parameth

The syntax for this tool is shown below:

parameth.py -u http://example.com

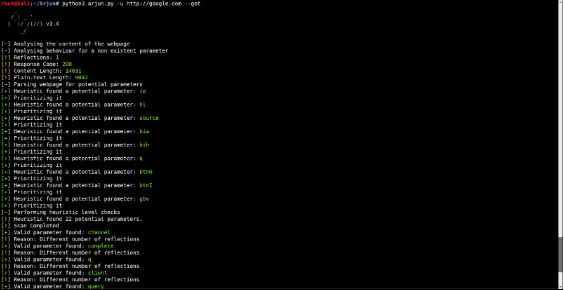

2. Arjun

Arjun finds valid HTTP parameters with a huge default dictionary of 25,980 parameter names. The best part? It takes less than 30 seconds to go through this huge list while making just 30-35 requests to the target.

Download: https://github.com/s0md3v/Arjun

Syntax: python arjun.py -u url --get